Streamlining AI Code Review with GitHuman

How Automated Feedback Loops Transform Manual Code Review Bottlenecks into Seamless Iterative Workflows

Hey there,

AI tools are revolutionizing how we write code for side projects, generating complete functions, components, and entire modules in seconds. That speed creates a critical bottleneck when you’re working alone with limited time: someone still has to review everything the AI produces, and that someone is you.

Traditional code review practices assume access to teammates who can understand the thought process behind implementation choices. As a side project developer juggling full-time work and personal commitments, you can’t afford to spend hours manually reviewing AI-generated code when you have only evenings and weekends to make progress. The code often appears syntactically perfect but may contain logical flaws, incorrect assumptions, or security vulnerabilities that aren’t immediately apparent.

The githuman-review skill addresses this specific challenge by providing an automated review process tailored for busy side project developers.

Understanding the Unique Challenges of AI Code Review

Reviewing AI-generated code presents fundamentally different challenges than reviewing code written by human developers, especially when you’re a side project developer working alone without team support.

Traditional code reviews rely heavily on understanding the developer’s intent and reasoning behind implementation decisions through team collaboration. When working on side projects, you don’t have teammates to ask about implementation choices, making it harder to determine if AI-generated code truly meets your project’s specific requirements or contains hidden assumptions. Unlike team-based development, side project developers must independently validate every piece of code while managing multiple responsibilities. Additionally, AI models may generate code that looks syntactically correct but contains logical errors, security vulnerabilities, or implementation patterns that don’t fit your side project’s architecture.

Recognizing these differences helps side project developers adapt their review practices to effectively validate AI-generated code while maximizing limited development time.

Best Practices for Reviewing AI-Generated Code

Effective review of AI-generated code requires a shift in focus from syntax and style to logic verification and requirement validation, ensuring the code performs as intended for your side project.

As a solo developer with limited time, you should prioritize examining the underlying logic and your project’s specific requirements rather than spending time on detailed formatting issues. The polished appearance of AI-generated code can create a false sense of security, masking potential logical errors or incorrect assumptions that could derail your side project’s progress. You must verify that the AI correctly interpreted your requirements and that the code handles edge cases appropriately without requiring extensive manual testing. Additionally, focus on quick validation techniques that confirm functionality works as expected for your side project’s specific use cases.

Adopting these practices helps ensure that AI-generated code meets your side project’s quality and security needs while maximizing the time-saving benefits that make AI tools valuable for busy developers.
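One quick validation technique is a small table of edge cases that you run against the generated function before reading it line by line. The sketch below is only an illustration: `chunk` stands in for any AI-generated helper, and the cases listed are assumptions about what such a helper should handle, not part of GitHuman or the skill.

```python
def chunk(items, size):
    """Hypothetical AI-generated helper: split items into lists of at most `size`."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


# Each entry is (arguments, expected result). Empty input, exact fit,
# a partial last chunk, and an oversized chunk are the usual trouble spots.
EDGE_CASES = [
    (([], 3), []),
    (([1, 2, 3], 3), [[1, 2, 3]]),
    (([1, 2, 3, 4], 3), [[1, 2, 3], [4]]),
    (([1], 5), [[1]]),
]


def run_edge_cases():
    """Return a list of (args, expected, actual) for every failing case."""
    failures = []
    for args, expected in EDGE_CASES:
        actual = chunk(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures


if __name__ == "__main__":
    print(run_edge_cases() or "all edge cases pass")
```

Writing the table takes a couple of minutes and forces you to state what you actually asked the AI for, which is often where a misinterpreted requirement shows up.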

Critical Mistakes Side Project Developers Make When Reviewing AI-Generated Code

Mistake #1: Misunderstanding the purpose of AI-generated code

Many side project developers approach AI-generated code with the same expectations as human-written code, leading to inappropriate review standards.

Reviewers often expect AI to follow the exact same coding patterns and architectural decisions that humans would make. AI models generate code based on statistical patterns from training data, which may differ from team-specific practices. This mismatch in expectations can lead to rejecting perfectly valid AI-generated solutions. Understanding that AI code should be evaluated on functionality and correctness rather than similarity to human approaches is essential.

Recognizing this difference helps reviewers apply appropriate evaluation criteria to AI-generated code.

Mistake #2: Focusing solely on syntax instead of logic

Reviewers tend to concentrate on formatting and style issues when examining AI-generated code, missing critical logical flaws.

AI-generated code often looks syntactically correct but may contain subtle logical errors or incorrect assumptions. The polished appearance of AI code can mask fundamental problems with algorithmic correctness or business logic. This visual appeal creates a false sense of security that prevents deeper inspection of the code’s actual functionality. Effective review requires looking beyond surface-level syntax to examine the underlying logic and data flow.

Proper review of AI code demands deeper scrutiny of functional correctness over superficial formatting.
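To make this concrete, here is a made-up example of code that would pass a style check yet is wrong. Both function names are hypothetical and the scenario (counting pages for a paginated list) is chosen only for illustration.

```python
def total_pages_generated(total_items: int, page_size: int) -> int:
    # Reads cleanly, but floor division silently drops a partial last page.
    return total_items // page_size


def total_pages_fixed(total_items: int, page_size: int) -> int:
    # Ceiling division: a partial last page still counts as a page.
    return -(-total_items // page_size)


if __name__ == "__main__":
    # 11 items at 5 per page need 3 pages, not 2.
    print(total_pages_generated(11, 5), total_pages_fixed(11, 5))
```

Nothing about the first version looks suspicious at a glance; the bug only appears when you ask what happens when the item count is not a multiple of the page size.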

Mistake #3: Assuming AI code is always optimized

Developers often accept AI-generated code without questioning its performance characteristics or resource usage.

AI models tend to favor code that is correct and readable over code that is tuned for performance. The generated code may include inefficient algorithms, unnecessary computations, or suboptimal data structures. Without performance testing, these inefficiencies can create bottlenecks in production systems. You should evaluate AI code for performance implications just as you would with human-written code.

Performance evaluation should remain a critical component of AI code review processes.
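As an illustration of the kind of inefficiency worth looking for, the sketch below shows two hypothetical versions of the same helper. The first uses list membership inside a loop, which is quadratic in the input size; the second returns the same result using sets. Neither function comes from GitHuman or the skill.

```python
def find_duplicates_quadratic(values):
    """First-draft shape: `in` on a list inside a loop is O(n^2) overall."""
    seen = []
    duplicates = []
    for value in values:
        if value in seen and value not in duplicates:
            duplicates.append(value)
        seen.append(value)
    return duplicates


def find_duplicates_linear(values):
    """Same output order, with sets for average O(1) membership checks."""
    seen = set()
    reported = set()
    duplicates = []
    for value in values:
        if value in seen and value not in reported:
            duplicates.append(value)
            reported.add(value)
        seen.add(value)
    return duplicates


if __name__ == "__main__":
    sample = [3, 1, 3, 2, 1, 3]
    print(find_duplicates_quadratic(sample), find_duplicates_linear(sample))
```

On a few dozen items the difference is invisible, which is exactly why it tends to slip through review and surface later on real data.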

30 Minutes -> 5 Minutes With AI

Implementing the GitHuman review process for AI-generated code dramatically reduces the time needed to conduct thorough code reviews, transforming a potentially 30-minute manual task into a 5-minute automated process that fits perfectly into a side project developer’s busy schedule.

The githuman-review skill streamlines the entire review workflow by automatically checking for staged changes, starting the GitHuman server when needed, and guiding developers through an interactive review process. This automated approach eliminates the manual setup typically required for code reviews, allowing busy side project developers to focus on the substantive aspects of validation rather than tool configuration. The skill intelligently detects if GitHuman is already running, preventing port conflicts, and seamlessly integrates with the existing Git workflow. Most importantly, it creates a feedback loop where reviewers can approve changes directly through the web interface or request modifications that are then automatically incorporated into the code, saving precious time for your side project.

Using this skill transforms AI code review from a time-consuming manual process into an efficient, standardized procedure that maintains quality while accelerating development velocity - perfect for developers who can only work on side projects during evenings and weekends.
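The preflight checks described above (are there staged changes, is a server already listening) can be sketched roughly as follows. This is not the skill’s actual implementation: the function names and the returned action strings are assumptions made for illustration, and the port is left as a parameter because the article does not state which port GitHuman uses. The only external command used is `git diff --cached --quiet`, which exits with status 1 when the index differs from HEAD.

```python
import socket
import subprocess


def has_staged_changes(repo_dir: str = ".") -> bool:
    """True when the Git index contains changes not yet committed."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--quiet"],
        cwd=repo_dir,
    )
    # 0 means no staged changes, 1 means there are some;
    # anything else (e.g. not a repository) is treated as "nothing staged".
    return result.returncode == 1


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """True when something already accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        return sock.connect_ex((host, port)) == 0


def plan_review(staged: bool, server_running: bool) -> str:
    """Decide the next step; the action names are hypothetical."""
    if not staged:
        return "nothing-to-review"
    return "reuse-server" if server_running else "start-server"
```

Keeping the decision in a pure function like `plan_review` makes the logic easy to check on its own, separately from Git and the network.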

How to Use the githuman-review Skill

The githuman-review skill automates the entire GitHuman review process. This tool is specifically designed to fit into busy schedules and maximize your limited side project development time. To implement this in your workflow:

Activate the skill by typing /githuman-review in Claude Code

The skill will automatically detect any staged changes in your repository

It will start the GitHuman server (or connect to an existing instance)

Navigate to the provided web interface to review your AI-generated code

Approve changes or request modifications directly through the interface

The skill handles the rest of the workflow automatically

The skill is already available in your Claude Code environment and can be used immediately to streamline your AI code review process, giving you more time to focus on building and improving your side project.

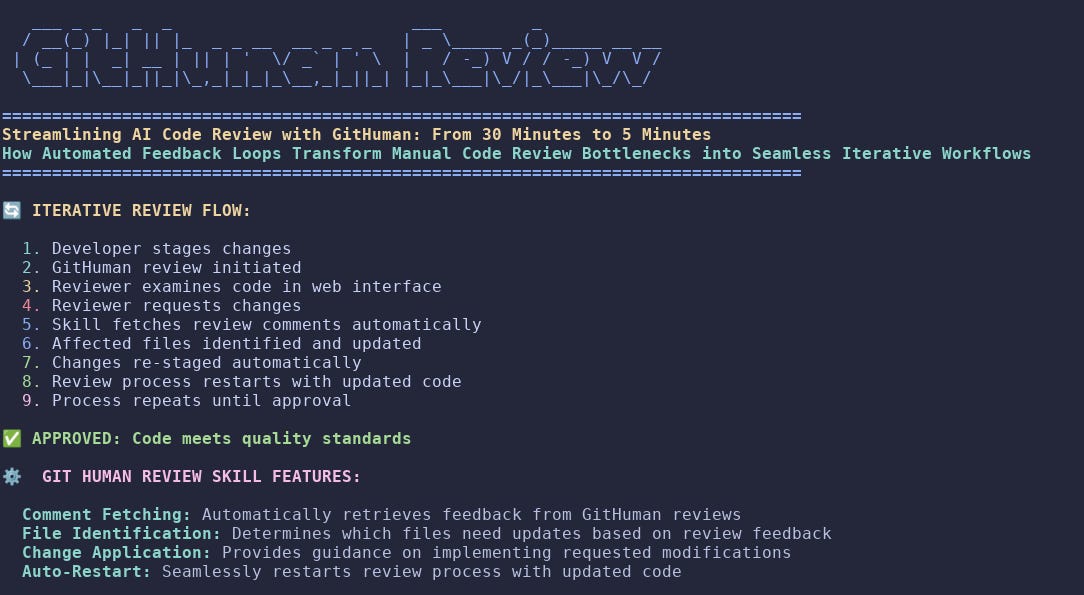

Iterative Review Process with Change Requests

One of the most powerful features of the updated githuman-review skill is its ability to handle iterative reviews when changes are requested. When reviewers identify areas for improvement in the GitHuman interface and mark the review as “changes requested”, the skill performs the following actions:

Automatically fetches comments: The skill retrieves all specific feedback and requested changes from the GitHuman review

Analyzes feedback: It parses the review comments to understand exactly what modifications are needed

Identifies affected files: The skill determines which files require updates based on the review feedback

Facilitates implementation: It provides clear guidance on how to implement the requested changes

Auto-restarts review: Once changes are made and staged, the skill automatically restarts the review process with the updated code
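The steps above can be pictured with a small sketch. It assumes a simplified comment shape (`ReviewComment` with a file path, line, and body) and hypothetical status strings; the real data returned by GitHuman may look different, so treat every name here as illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewComment:
    """Hypothetical shape of one piece of review feedback."""
    file_path: str
    line: int
    body: str
    resolved: bool = False


def group_open_comments(comments):
    """Map each affected file to its unresolved comments, ordered by line."""
    by_file = defaultdict(list)
    for comment in comments:
        if not comment.resolved:
            by_file[comment.file_path].append(comment)
    return {
        path: sorted(items, key=lambda c: c.line)
        for path, items in sorted(by_file.items())
    }


def next_action(status: str) -> str:
    """Pick the next step of the loop from a (hypothetical) review status."""
    if status == "approved":
        return "finish"
    if status == "changes_requested":
        return "apply-feedback-and-restage"
    return "wait-for-reviewer"
```

Grouping feedback by file first means each file is opened once per review round, with its comments in top-to-bottom order.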

You can download the skill here.

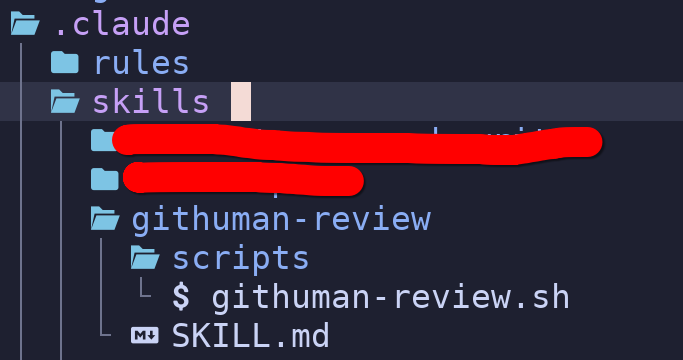

Add it to your .claude folder like this:
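If you want to double-check the placement from a script, here is a minimal sketch. It assumes the usual Claude Code project layout, where a skill lives at `.claude/skills/<skill-name>/SKILL.md`, and it assumes the folder is named `githuman-review`; the downloaded archive may use a different name, so adjust accordingly.

```python
from pathlib import Path


def skill_file(project_root: Path, skill_name: str = "githuman-review") -> Path:
    """Expected location of the skill definition (folder name is an assumption)."""
    return project_root / ".claude" / "skills" / skill_name / "SKILL.md"


def is_installed(project_root: Path, skill_name: str = "githuman-review") -> bool:
    """True when the skill definition file exists at the expected path."""
    return skill_file(project_root, skill_name).is_file()


if __name__ == "__main__":
    root = Path.cwd()
    print(skill_file(root), "found" if is_installed(root) else "missing")
```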

We will release it in the skills hub at some point, but for now we wanted to give it to our subscribers first. You’ll notice we masked the names of the other skills. That’s because we plan to release new skills over the coming weeks, so no SPOILERS.

This iterative process continues seamlessly until the code meets the required standards, creating a closed-loop feedback system that significantly reduces the manual overhead of managing multiple review cycles.

Benefits of Using GitHuman for AI Code Review

Benefit #1: Automated error detection and security scanning

GitHuman automatically identifies potential security vulnerabilities and common errors in AI-generated code, saving developers from manual inspection of each line.

The automated scanning catches security issues that might not be immediately obvious to a busy side project developer. Traditional manual review processes often miss subtle security flaws or common vulnerability patterns that AI models might inadvertently introduce. GitHuman’s systematic approach ensures consistent application of security standards across all code submissions. This automated layer safeguards both the developer’s time and the project’s security posture.

Implementing automated security scanning creates a safety net that catches issues before they become problematic in production.

Benefit #2: Standardized review criteria for consistent quality

GitHuman applies consistent review standards to every code submission, ensuring that quality expectations remain uniform regardless of the developer’s fatigue level or time constraints.

Consistent review standards eliminate the variability that comes with manual reviews, especially when developers are tired after a full day of work. The tool ensures that every piece of AI-generated code receives the same level of scrutiny, preventing important issues from slipping through during late-night coding sessions. This standardization helps maintain quality even when development time is limited to evenings and weekends. A predictable process also means you know exactly what to expect from each review.

Standardized criteria provide reliable quality assurance that doesn’t fluctuate based on external factors.

Benefit #3: Faster iteration cycles for rapid prototyping

GitHuman accelerates the feedback loop between code generation and validation, enabling rapid iteration that’s essential for side project development.

Quick feedback enables developers to experiment with different approaches without waiting for lengthy review processes. The shortened iteration cycle encourages experimentation and innovation, allowing developers to try multiple solutions rapidly. This acceleration is particularly valuable for side projects where momentum and frequent progress are crucial for maintaining motivation. Faster iterations mean more learning and improvement in less time.

Rapid feedback loops keep development momentum high and encourage continued progress on side projects.

Conclusion

The githuman-review skill transforms AI code review from a potential bottleneck into an efficient, standardized process that maintains quality while maximizing the limited time side project developers have available.

Traditional code review practices struggle to accommodate the unique characteristics of AI-generated code, often requiring extensive manual processes that side project developers simply can’t afford. The githuman-review skill addresses these challenges by providing automated validation and structured review interfaces that focus on the specific concerns of AI-generated code, saving precious hours that can be spent building features for your side project. Solo developers implementing this approach report significant improvements in both code quality and development speed, with the added benefit of consistent evaluation criteria across all AI-generated code submissions.

Embracing specialized tools like GitHuman for AI code review represents a crucial evolution in development practices that helps side project developers get from idea to first user faster while maintaining the quality that leads to sustainable growth and user retention.

PS... If you’re enjoying ShipWithAI, please consider referring this edition to a friend.

AI code review is one area where automation actually delivers value. Not perfect, but valuable.

I've been using AI coding agents (https://thoughts.jock.pl/p/claude-code-vs-codex-real-comparison-2026) and automated review catches things humans miss—patterns across files, security issues, style inconsistencies.

But it also flags false positives constantly. The signal-to-noise ratio needs work.

Your streamlining approach makes sense. The question is: how do you tune the AI to catch real issues without drowning teams in noise?

Have you found a good balance between thoroughness and usability?